Transformer-Optimized AI Chip Market

Transformer-Optimized AI Chip Market Size

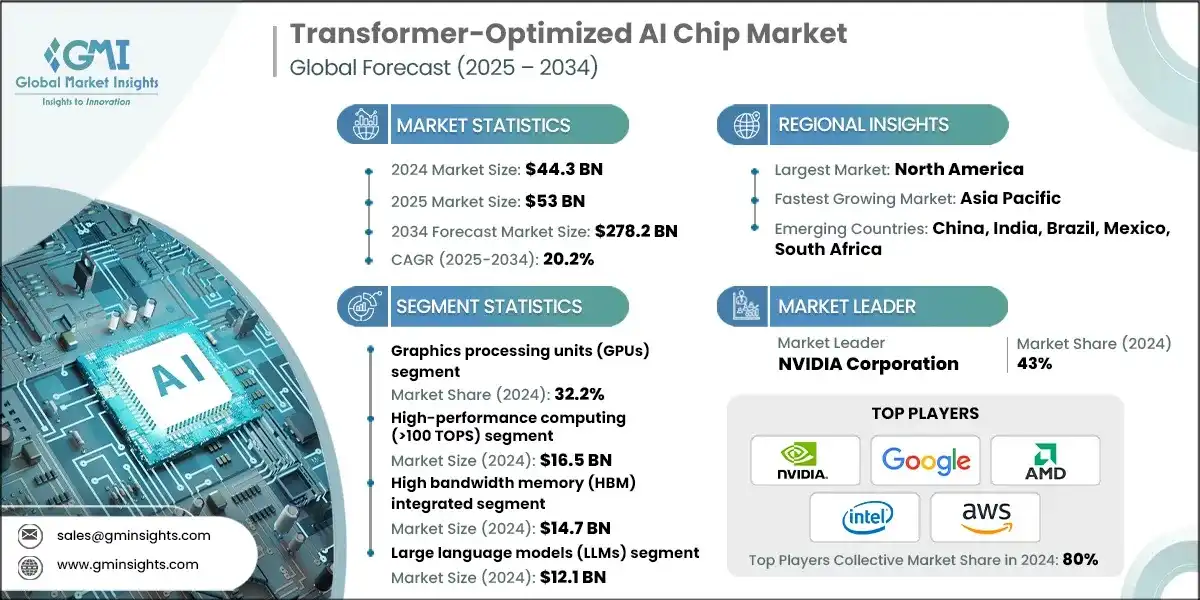

The global transformer-optimized AI chip market was valued at USD 44.3 billion in 2024. According to the latest report published by Global Market Insights Inc., the market is expected to grow from USD 53 billion in 2025 to USD 278.2 billion by 2034, at a CAGR of 20.2% over the forecast period.
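The growth rate quoted above can be reproduced from the two endpoint values. The following is a minimal sketch, assuming the CAGR is compounded annually over the nine years from 2025 to 2034; the function names `cagr` and `project` are illustrative and do not come from the report.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Year-by-year values when `start_value` compounds at `rate`."""
    return [start_value * (1 + rate) ** n for n in range(years + 1)]


# Figures from the report, in USD billion.
SIZE_2025 = 53.0
SIZE_2034 = 278.2
YEARS = 2034 - 2025  # nine compounding periods (assumption)

implied_rate = cagr(SIZE_2025, SIZE_2034, YEARS)
path = project(SIZE_2025, 0.202, YEARS)

print(f"Implied CAGR 2025-2034: {implied_rate:.1%}")
for year, value in zip(range(2025, 2035), path):
    print(year, round(value, 1))
```

Compounding USD 53 billion at the rounded 20.2% rate lands slightly below the stated 2034 figure, which is consistent with the published rate being rounded to one decimal place.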

The transformer-optimized AI chip market is gaining momentum as demand rises for specialized hardware capable of accelerating transformer-based models and large language models (LLMs). Demand for these chips is growing in AI training and inference environments where throughput, low latency, and energy efficiency are priorities. The shift toward domain-specific architectures that combine transformer-optimized compute units, high-bandwidth memory, and optimized interconnects is driving adoption of these chips in next-generation AI use cases.

For example, Intel Corporation's Gaudi 3 AI accelerator is purpose-built for transformer-based workloads and is equipped with 128 GB of HBM2e memory and 3.7 TB/s of memory bandwidth, which allows it to train large language models more quickly and keep inference latency lower. This capability continues to promote adoption in cloud-based AI data centers and enterprise AI platforms.
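To see why memory bandwidth bears on inference latency, a rough back-of-the-envelope bound can help. The sketch below assumes a simplified memory-bound decoding model in which every weight is read from HBM once per generated token; the 70-billion-parameter model and 1-byte-per-parameter precision are hypothetical values chosen for illustration, and real accelerators will differ because of caching, batching, and compute limits.

```python
# Capacity and bandwidth figures quoted in the text for Gaudi 3.
HBM_CAPACITY_BYTES = 128e9        # 128 GB
HBM_BANDWIDTH_BYTES_PER_S = 3.7e12  # 3.7 TB/s


def min_seconds_per_token(weight_bytes, bandwidth_bytes_per_s):
    """Lower bound on decode time per token if all weights are read once."""
    return weight_bytes / bandwidth_bytes_per_s


# Hypothetical model (assumption, not from the report):
# 70 billion parameters stored at 1 byte per parameter.
PARAMS = 70e9
BYTES_PER_PARAM = 1
weight_bytes = PARAMS * BYTES_PER_PARAM

fits_in_memory = weight_bytes <= HBM_CAPACITY_BYTES
token_time = min_seconds_per_token(weight_bytes, HBM_BANDWIDTH_BYTES_PER_S)
full_sweep_time = min_seconds_per_token(HBM_CAPACITY_BYTES, HBM_BANDWIDTH_BYTES_PER_S)

print(f"Weights fit in HBM: {fits_in_memory}")
print(f"Bandwidth-bound time per token: {token_time * 1e3:.1f} ms")
print(f"Time to read all 128 GB once: {full_sweep_time * 1e3:.1f} ms")
```

Under these assumptions, doubling memory bandwidth would halve the bound, which is the intuition behind treating bandwidth as a first-order specification for transformer inference hardware.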

Industries such as cloud computing, autonomous systems, and edge AI are quickly adopting transformer-optimized chips to support real-time analytics, generative AI, and multi-modal AI applications. For example, NVIDIA's H100 Tensor Core GPU includes transformer-specific optimizations, such as efficient self-attention operations and memory hierarchy improvements, so enterprises can deploy large-scale transformer models with higher processing rates and lower energy use.

This growth is aided by the emergence of domain-specific accelerators and chiplet integration strategies, which combine multiple dies and high-speed interconnects to scale transformer performance efficiently. For instance, the start-up Etched.ai Inc. announced that it is working on Sohu, a transformer-only ASIC slated for 2024 and optimized for inference on transformer workloads, which indicates a move toward highly specialized hardware for AI workloads. Emerging packaging and memory hierarchy improvements are shifting the market toward lower chip latency and higher density, allowing faster transformer execution in close proximity to the compute units.

For example, Intel's Gaudi 3 combines multi-die HBM stacks and chiplet interconnect technology to support resilient transformer training and inference at scale, demonstrating that hardware-software co-optimization enables better transformer performance at lower operational expense.

These advances are expanding the use cases for transformer-optimized AI chips across high-performance cloud, edge AI, and distributed computing, and can propel market growth and scalable deployment across enterprise, industrial, and AI research settings.

Transformer-Optimized AI Chip Market Report Attributes

| Key Takeaway | Details |
|---|---|
| Market Size & Growth | |
| Base Year | 2024 |
| Market Size in 2024 | USD 44.3 Billion |
| Market Size in 2025 | USD 53 Billion |
| Forecast Period 2025 - 2034 CAGR | 20.2% |
| Market Size in 2034 | USD 278.2 Billion |
| Key Market Trends | |
| Drivers | Impact |
| Rising demand for LLMs and transformer architectures | Rising demand for LLMs and transformer architectures drives approximately 30–35% of market growth, as specialized chips accelerate large language model training and inference. Their optimized compute and memory access patterns enable enterprises to deploy generative AI, multi-modal AI, and NLP applications efficiently. |
| Rapid growth of AI training and inference workloads | Rapid growth of AI training and inference workloads contributes roughly 25–30% of market expansion, due to the need for high-bandwidth, low-latency hardware. Transformer-optimized chips enhance performance for cloud AI data centers, accelerating model convergence and reducing overall compute costs. |
| Domain-specific accelerators for transformer compute | Domain-specific accelerators for transformer compute account for around 20–25% of market impact, as these chips leverage self-attention optimization and transformer-specific operations. This improves throughput and energy efficiency for inference and training workloads in enterprise and research applications. |
| Edge and distributed transformer deployment | Edge and distributed transformer deployment supports about 15–20% of market growth, driven by compact, energy-efficient chips that enable transformer AI workloads on edge devices. Use cases include real-time analytics, autonomous systems, and IoT-based AI inferencing in latency-sensitive environments. |
| Advanced packaging and memory-hierarchy innovations | Advanced packaging and memory-hierarchy innovations influence roughly 10–15% of market growth by enabling high-density, low-latency compute near memory. Chiplet integration and high-bandwidth memory stacks optimize transformer execution, lowering operational costs and improving AI model scalability. |
| Pitfalls & Challenges | Impact |
| High Development Costs and R&D Complexity | Developing transformer-optimized chips requires advanced design, specialized architectures, and cutting-edge fabrication, driving high R&D and prototyping costs. This limits entry for smaller players and slows adoption, impacting roughly 20–25% of potential market growth. |
| Thermal Management and Power Efficiency Constraints | High-performance transformer chips generate significant heat and consume substantial power. Efficient cooling and power optimization remain critical, with inefficiencies potentially reducing deployment in edge and high-density data center environments, affecting around 15–20% of market scalability. |
| Opportunities | Impact |
| Expansion into Large Language Models (LLMs) and Generative AI | Increasing adoption of LLMs and generative AI applications is driving demand for specialized transformer chips. This presents a growth potential of 30–35% as enterprises and cloud providers invest in hardware optimized for high-throughput AI workloads. |
| Edge and Distributed AI Deployment | Deployment of transformer models on edge devices and distributed computing platforms creates demand for compact, energy-efficient chips. This opportunity could impact 20–25% of market expansion by enabling low-latency AI inference in autonomous systems, IoT, and industrial applications. |
| Market Leaders (2024) | |
| Market Leaders | 43% market share |
| Top Players | Collective market share in 2024 is 80% |
| Competitive Edge | |
| Regional Insights | |
| Largest Market | North America |
| Fastest Growing Market | Asia Pacific |
| Emerging Country | China, India, Brazil, Mexico, South Africa |
| Future Outlook | |

Transformer-Optimized AI Chip Market Trends

- One major trend is the shift to domain-specific AI accelerators, namely chips optimized specifically for large language models and transformer architectures. Companies are designing chips that combine high-bandwidth memory, dedicated self-attention processing units, and low-latency interconnects to maximize transformer performance. This trend serves data-center and cloud-based AI systems by providing faster training, real-time inference, and improved energy efficiency.

- With this capability, transformer-optimized AI chips are being positioned not merely as general-purpose AI accelerators but as core hardware for managing high-throughput transformer workloads. Cloud computing, autonomous systems, and edge AI industries have also started adopting these systems for low-latency inference, multi-modal AI applications, and generative use cases. For example, the NVIDIA H100 GPU implements transformer-specific optimizations that support large-scale deployment of large language models.

- In AI and edge deployments, these chips are starting to replace legacy GPUs for specific transformer workloads, which is making them commonplace in the technology stacks of high-growth verticals (e.g., cloud AI platforms, autonomous vehicles, industrial AI). There is also a separate trend around chiplet composition, memory hierarchy, and multi-die packaging, which can yield higher throughput, lower latency, and better thermal efficiency. One example is Intel's Gaudi 3 accelerator, which uses multi-die HBM (_high-bandwidth memory_) stacks and chiplet interconnects to achieve both higher memory bandwidth and higher transformer performance.

- As these changes scale and mature, power efficiency and compute density are improving, enabling transformer-optimized chips to scale across edge-connected devices, distributed AI systems, and high-performance data centers. Real-time deployments are increasing, particularly for AI workloads involving real-time analytics, generative AI, and large language model inference. For example, Google's TPU v5 is being introduced with memory improvements and systolic array configurations that scale transformer workloads efficiently.

- These chips are driving the next generation of AI applications at the edge, in the cloud, and within distributed computing by providing high-bandwidth, low-latency, and energy-efficient execution for transformer workloads. This trend expands the addressable market for transformer-optimized AI hardware and establishes it as an important enabler within AI-driven computing architectures.

- There also appears to be a trend toward smaller, low-energy chips for edge transformer deployments, characterized by tight power limits, high memory bandwidth, and real-time inference. For example, startups like Etched.ai are developing inference-only transformer ASICs geared toward edge and distributed AI systems, underscoring this shift toward specialized low-power hardware.

Transformer-Optimized AI Chip Market Analysis

Based on the chip type, the market is divided into neural processing units (NPUs), graphics processing units (GPUs), tensor processing units (TPUs), application-specific integrated circuits (ASICs), and field-programmable gate arrays (FPGAs). The GPU segment accounted for 32.2% of the market in 2024.

- The graphics processing units (GPUs) segment holds the largest share in the transformer-optimized AI chip market due to its mature ecosystem, high parallelism, and proven ability to accelerate transformer-based workloads. GPUs enable massive throughput for training and inference of large language models (LLMs) and other transformer architectures, making them ideal for cloud, data center, and enterprise AI deployments. Their versatility, broad software support, and high compute density attract adoption across industries including cloud computing, autonomous systems, finance, and healthcare, positioning GPUs as the backbone for transformer-optimized AI computing.

- Manufacturers should continue optimizing GPUs for transformer workloads by improving memory bandwidth, power efficiency, and AI-specific instruction sets. Collaborations with cloud providers, AI framework developers, and data-center operators can further drive adoption, ensuring scalable performance for next-generation transformer models.

- The neural processing units (NPUs) segment is the fastest-growing in the market, with a 22.6% CAGR, and is driven by rising demand for specialized, energy-efficient hardware optimized for transformer-based AI at the edge and in distributed environments. NPUs provide low-latency inference, compact form factors, and high compute efficiency, making them ideal for real-time AI applications in autonomous vehicles, robotics, smart devices, and edge AI deployments. The increasing adoption of on-device AI and real-time transformer inference accelerates technological innovation and market growth for NPUs.

- Manufacturers should focus on designing NPUs with enhanced self-attention compute units, optimized memory hierarchies, and low-power operation to support edge and distributed transformer workloads. Investments in integration with mobile, automotive, and IoT platforms, along with partnerships with AI software developers, will unlock new market opportunities and further accelerate growth in the NPU segment.

Based on the performance class, the transformer-optimized AI chip market is segmented into high-performance computing (>100 TOPS), mid-range performance (10–100 TOPS), edge/mobile performance (1–10 TOPS), and ultra-low power (<1 TOPS). The high-performance computing (>100 TOPS) segment dominated the market in 2024 with a revenue of USD 16.5 billion.

- The high-performance computing (HPC) segment (>100 TOPS) holds the largest share of 37.2% in the transformer-optimized AI chip market due to its ability to support large-scale AI model training, massive parallelism, and ultra-high throughput. HPC-class chips are critical for cloud and enterprise AI data centers where transformer-based models run to tens of billions of parameters. These chips enable faster LLM training, large-batch inference, and complex multi-modal AI applications, making them indispensable for research institutions, hyperscalers, and AI-driven industries such as finance, healthcare, and scientific computing.

- Manufacturers are enhancing HPC chips with high-bandwidth memory, advanced interconnects, and optimized transformer compute units to maximize performance for large-scale AI workloads. Strategic alliances with cloud providers and AI framework developers further strengthen adoption and deployment in data-center environments.

- The edge/mobile performance segment (1–10 TOPS) is the fastest-growing segment, driven by the increasing deployment of transformer-based AI models in edge devices, mobile platforms, and IoT applications. Edge-class chips offer compact, energy-efficient compute solutions capable of real-time transformer inference, enabling low-latency AI for autonomous vehicles, smart cameras, AR/VR devices, and wearable AI systems. The rising demand for on-device intelligence, privacy-preserving AI, and distributed AI processing fuels growth in this segment.

- Manufacturers are focusing on integrating NPUs, optimized memory hierarchies, and low-power transformer acceleration into edge-class chips. Collaborations with device OEMs, AI software developers, and telecom providers are accelerating adoption, enabling real-time AI experiences and expanding the market for edge transformer-optimized hardware.
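The performance classes above are defined by TOPS thresholds, which can be expressed as a simple lookup. This is a minimal sketch: the function name `performance_class` is illustrative, and the handling of the exact boundary values (10 and 100 TOPS) is an assumption, since the report's ranges overlap at those points.

```python
def performance_class(tops):
    """Map a chip's TOPS rating to the report's performance classes.

    Boundary handling is an assumption: exactly 100 TOPS is treated as
    mid-range (the top class is ">100"), exactly 10 TOPS as mid-range,
    and exactly 1 TOPS as edge/mobile (the bottom class is "<1").
    """
    if tops < 0:
        raise ValueError("TOPS must be non-negative")
    if tops > 100:
        return "high-performance computing"
    if tops >= 10:
        return "mid-range performance"
    if tops >= 1:
        return "edge/mobile performance"
    return "ultra-low power"


# Hypothetical chip ratings used only to exercise each class.
for rating in (0.5, 4, 45, 400):
    print(rating, "TOPS ->", performance_class(rating))
```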

Based on the memory, the transformer-optimized AI chip market is segmented into high bandwidth memory (HBM) integrated, on-chip SRAM optimized, processing-in-memory (PIM) and distributed memory systems. The high bandwidth memory (HBM) integrated segment dominated the market in 2024 with a revenue of USD 14.7 billion.

- The high-bandwidth memory (HBM) integrated segment holds the largest share of 33.2% in the transformer-optimized AI chip market due to its ultra-fast memory access and massive bandwidth, which are critical for training and inference of transformer-based models. HBM integration minimizes memory bottlenecks, enabling HPC-class GPUs and AI accelerators to handle large-scale LLMs, multi-modal AI, and real-time analytics efficiently. Enterprises and cloud providers leverage HBM-enabled chips to accelerate AI workloads, reduce latency, and enhance overall system performance.

- The increasing deployment of HBM-integrated chips in high-performance computing environments is expanding their application across generative AI, scientific research, and large-scale data processing. By enabling faster matrix multiplications and self-attention operations, these chips support advanced AI capabilities such as multi-modal reasoning, large-scale recommendation systems, and real-time language translation.

- The processing-in-memory (PIM) segment is the fastest-growing segment, with a 21.5% CAGR, and is driven by the need to reduce data movement and energy consumption for transformer inference, particularly in edge AI and mobile applications. PIM architectures embed compute logic directly within memory arrays, enabling real-time transformer operations with lower latency, higher energy efficiency, and reduced thermal load. This makes them ideal for autonomous systems, wearable AI platforms, and edge analytics where power and space constraints are critical.

- PIM adoption is expanding as edge AI and distributed transformer deployments increase, enabling on-device natural language processing, computer vision, and sensor-fusion applications. By combining memory and compute, PIM reduces reliance on external DRAM and enables low-latency inference for real-time decision-making, unlocking new use cases in industrial automation, smart infrastructure, and AI-enabled consumer electronics.

Based on the application, the transformer-optimized AI chip market is segmented into large language models (LLMs), computer vision transformers (ViTs), multimodal AI systems, generative AI applications, and others. The large language models (LLMs) segment dominated the market in 2024 with a revenue of USD 12.1 billion.

- The large language models (LLMs) segment holds the largest share of 27.2% in the transformer-optimized AI chip market, driven by the surge in demand for generative AI, natural language understanding, and text-to-text applications. Transformer-optimized chips enable massive parallelism, high memory bandwidth, and low-latency computation, which are critical for training and deploying LLMs with billions of parameters. Cloud AI platforms, research institutions, and enterprise AI systems increasingly rely on these chips to accelerate training cycles, reduce energy consumption, and optimize inference throughput.

- LLMs are now being deployed across industries such as finance, healthcare, and customer service for applications including automated document summarization, question-answering systems, and code generation. The ability to process vast datasets in real time drives demand for transformer-optimized hardware capable of handling intensive attention and embedding operations efficiently.

- The multimodal AI systems segment is the fastest-growing, with a 23.1% CAGR, and is fueled by the expansion of AI models capable of processing text, images, audio, and video simultaneously. Transformer-optimized chips designed for multimodal workloads provide high memory bandwidth, compute efficiency, and specialized interconnects to handle diverse data streams. These capabilities enable real-time analytics, cross-modal reasoning, and generative AI for autonomous systems, augmented reality, and interactive AI applications.

- As industries adopt multimodal AI for intelligent assistants, autonomous robots, and immersive media experiences, the need for compact, energy-efficient, and high-throughput transformer hardware increases. This growth trend highlights the shift toward integrated AI solutions capable of delivering cross-domain intelligence at the edge and in data centers, broadening the overall market opportunity.
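The segment revenues and shares quoted in the preceding paragraphs can be cross-checked against the USD 44.3 billion global total for 2024. The sketch below is illustrative only: the `tolerance` of 0.2 percentage points is an assumed allowance for rounding in the published figures, and the helper names are not from the report.

```python
TOTAL_2024 = 44.3  # USD billion, global market size quoted in the report

# (segment, 2024 revenue in USD billion, stated share in percent),
# all taken from the segment paragraphs above.
SEGMENTS = [
    ("High-performance computing (>100 TOPS)", 16.5, 37.2),
    ("HBM integrated", 14.7, 33.2),
    ("Large language models (LLMs)", 12.1, 27.2),
]


def implied_share(revenue, total=TOTAL_2024):
    """Share of the total market, in percent, implied by a revenue figure."""
    return 100.0 * revenue / total


def check_shares(segments, tolerance=0.2):
    """Compare implied and stated shares; tolerance is in percentage points."""
    results = []
    for name, revenue, stated in segments:
        implied = implied_share(revenue)
        results.append((name, implied, stated, abs(implied - stated) <= tolerance))
    return results


results = check_shares(SEGMENTS)
for name, implied, stated, ok in results:
    status = "consistent" if ok else "check figures"
    print(f"{name}: implied {implied:.2f}%, stated {stated}% ({status})")
```

Small gaps between implied and stated shares are expected here, because both the revenues and the percentages are published to one decimal place.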

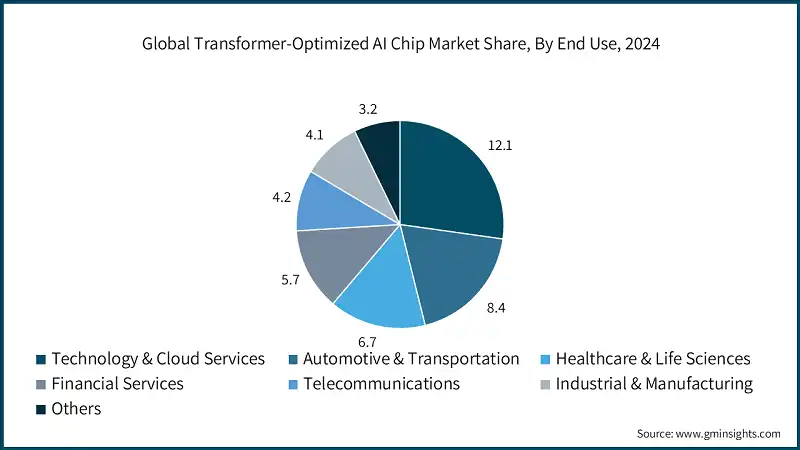

Based on the end use, the transformer-optimized AI chip market is segmented into technology & cloud services, automotive & transportation, healthcare & life sciences, financial services, telecommunications, industrial & manufacturing and others. The technology & cloud services segment dominated the market in 2024 with a revenue of USD 12.1 billion.

- The technology & cloud services segment dominates the transformer-optimized AI chip market, driven by hyperscale data centers, AI research institutions, and enterprise cloud providers deploying large-scale transformer models for generative AI, search optimization, and recommendation systems. Transformer-optimized chips deliver the computational density, parallel processing capability, and memory bandwidth required to train and run inference on massive AI workloads efficiently. Cloud leaders are leveraging these chips to reduce total cost of ownership, accelerate AI service deployment, and enhance scalability for commercial AI offerings such as large language model APIs and AI-driven SaaS platforms.

- The increasing integration of transformer-optimized accelerators into cloud infrastructure supports a broader ecosystem of AI developers and enterprises using advanced AI models for productivity, analytics, and automation. This dominance reflects the cloud sector’s central role in driving AI hardware innovation and mass adoption across global markets.

- The automotive & transportation segment is the fastest-growing, with a 22.6% CAGR, fueled by the integration of AI-driven systems into autonomous vehicles, advanced driver-assistance systems (ADAS), and in-vehicle digital platforms. Transformer-optimized chips are increasingly used to process sensor fusion data, real-time vision perception, and natural language interfaces for human-machine interaction, enhancing vehicle intelligence and safety.

- The growing need for on-board AI inference, low-latency decision-making, and efficient model compression is propelling demand for transformer-optimized chips in this sector. As automotive OEMs and Tier-1 suppliers adopt transformer-based neural networks for predictive maintenance, situational awareness, and navigation, the segment is set to become a major contributor to next-generation mobility innovation.

The North America transformer-optimized AI chip market dominated with a revenue share of 40.2% in 2024.

- North America leads the transformer-optimized AI chip industry, driven by surging demand from hyperscale cloud providers, AI research institutions, and defense technology programs. The region’s robust semiconductor design ecosystem, availability of advanced foundry capabilities, and strong focus on AI infrastructure investments are key enablers of growth. Government-backed initiatives promoting domestic chip production and AI innovation, such as the CHIPS and Science Act, further accelerate market expansion across high-performance computing and data-driven industries.

- The rapid adoption of generative AI, autonomous systems, and AI-as-a-service platforms is intensifying demand for transformer-optimized accelerators. Enterprises across sectors, ranging from cloud and software to healthcare and finance, are integrating these chips to enhance model training efficiency and inference scalability. North America's well-established AI cloud infrastructure and expanding deployment of large language models continue to reinforce its dominance in this segment.

- Collaborative efforts among research institutions, AI startups, and national laboratories are advancing chip architectures optimized for transformer workloads. Regional innovation programs focusing on energy-efficient designs, chiplet integration, and edge-AI optimization support next-generation computing performance. With strong public-private R&D partnerships and rising demand for AI-optimized computing across commercial and defense sectors, North America is set to remain a global hub for transformer-accelerated AI technologies.

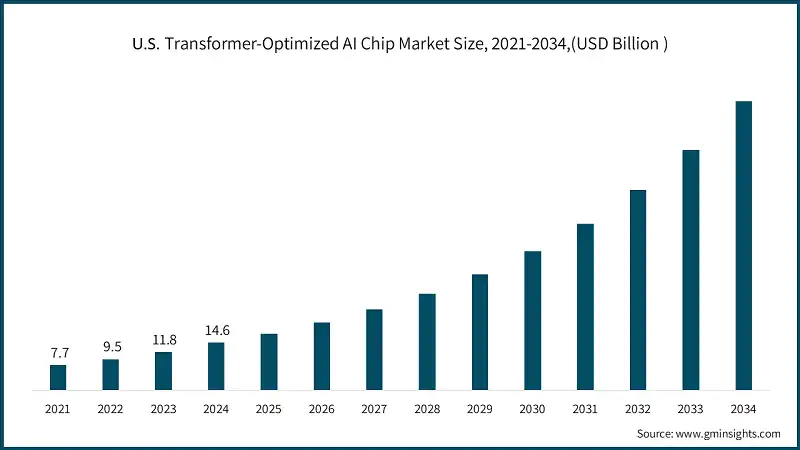

The U.S. transformer-optimized AI chip market was valued at USD 7.7 billion and USD 9.5 billion in 2021 and 2022, respectively. The market size reached USD 14.6 billion in 2024, growing from USD 11.8 billion in 2023.

- The U.S. dominates the transformer-optimized AI chip industry, driven by its leadership in AI research, semiconductor design, and hyperscale computing infrastructure. The presence of major cloud service providers such as Amazon, Microsoft, and Google, combined with advanced chip innovators and AI-focused startups, underpins large-scale adoption. Strategic government initiatives, including the CHIPS and Science Act, are strengthening domestic fabrication, R&D capabilities, and AI supply chain resilience. The U.S. continues to lead in developing transformer-based architectures powering large language models, generative AI, and enterprise-scale intelligent computing systems.

- To sustain leadership, U.S. stakeholders should prioritize developing energy-efficient, high-throughput AI chips tailored for transformer workloads. Focus areas include optimizing interconnect bandwidth, memory integration, and heterogeneous compute capabilities to meet the evolving needs of cloud and edge AI ecosystems. Expanding public-private partnerships, accelerating AI workforce development, and fostering innovation in chiplet-based and domain-specific architectures will further reinforce the U.S.’s dominance in next-generation transformer-optimized AI computing.
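The U.S. market values quoted above for 2021 through 2024 imply year-over-year growth rates that can be derived directly. The following is a minimal sketch using only the figures in the text; the variable and function names are illustrative, and the U.S. share is computed against the USD 44.3 billion global 2024 figure stated earlier in the report.

```python
# U.S. market size by year in USD billion, as quoted in the text.
US_MARKET = {2021: 7.7, 2022: 9.5, 2023: 11.8, 2024: 14.6}
GLOBAL_2024 = 44.3  # USD billion, global market size for 2024


def yoy_growth(series):
    """Year-over-year growth rate keyed by the later year of each pair."""
    years = sorted(series)
    return {
        later: series[later] / series[earlier] - 1
        for earlier, later in zip(years, years[1:])
    }


growth = yoy_growth(US_MARKET)
periods = 2024 - 2021
historical_cagr = (US_MARKET[2024] / US_MARKET[2021]) ** (1 / periods) - 1
us_share_2024 = US_MARKET[2024] / GLOBAL_2024

for year, rate in growth.items():
    print(f"{year}: {rate:.1%} growth over the prior year")
print(f"2021-2024 CAGR: {historical_cagr:.1%}")
print(f"U.S. share of the 2024 global market: {us_share_2024:.1%}")
```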

The Europe transformer-optimized AI chip market accounted for USD 7.9 billion in 2024 and is anticipated to show lucrative growth over the forecast period.

- Europe holds a strong position supported by robust investments in semiconductor R&D, AI infrastructure, and sustainable digital transformation. Key countries such as Germany, France, and the Netherlands are leading initiatives to integrate transformer-optimized chips into data centers, autonomous systems, and industrial AI applications. The region’s strategic emphasis on sovereign computing capabilities and government-backed programs under the EU Chips Act are accelerating domestic chip production and AI innovation, strengthening Europe’s role in high-performance and energy-efficient AI computing.

- Enterprises and research institutions are increasingly adopting transformer-optimized architectures for generative AI, multimodal systems, and edge inference workloads. These chips enable efficient training and deployment of complex models in automotive, manufacturing, and smart infrastructure sectors. Collaborative efforts between AI research labs, semiconductor companies, and automotive OEMs are driving advancements in domain-specific AI accelerators and low-power transformer chips, positioning Europe as a key hub for sustainable and responsible AI hardware innovation aligned with its digital and green industrial strategy.

Germany dominates with a 24.3% share of the Europe transformer-optimized AI chip market, showcasing strong growth potential.

- Germany represents a pivotal market for the transformer-optimized AI chip industry, driven by its strong industrial base, leadership in automotive innovation, and growing focus on AI-driven manufacturing and automation. The country’s strategic initiatives under the “AI Made in Germany” framework and substantial investments in semiconductor and data infrastructure are supporting the integration of transformer-based architectures in smart factories, autonomous mobility, and industrial robotics. Germany’s emphasis on technological sovereignty and digital transformation further strengthens domestic demand for high-performance, energy-efficient AI chips.

- The expanding ecosystem of automotive OEMs, industrial automation leaders, and AI research institutes in Germany is accelerating the adoption of transformer-optimized chips for real-time analytics, predictive maintenance, and generative design applications. Partnerships between semiconductor developers and automotive technology firms are advancing AI-driven control systems and edge intelligence for connected vehicles and production environments. These developments position Germany as a European frontrunner in deploying transformer-optimized AI solutions across industrial and mobility domains.

The Asia-Pacific transformer-optimized AI chip market is anticipated to grow at the highest CAGR of 21.7% during the analysis timeframe.

- Asia Pacific is emerging as the fastest-growing region in the transformer-optimized AI chip industry, driven by rapid advancements in semiconductor manufacturing, expanding AI infrastructure, and strong government support for digital transformation. Countries such as China, Japan, South Korea, and Taiwan are investing heavily in AI-accelerated computing, cloud infrastructure, and edge AI deployment. The region’s dominance in semiconductor fabrication and packaging enables cost-efficient production of transformer-optimized chips, supporting large-scale adoption across industries including consumer electronics, automotive, and telecommunications.

- The surge in AI training workloads, generative AI models, and smart device integration is propelling regional demand for high-performance transformer chips capable of handling massive data throughput with low latency. Strategic partnerships between chip manufacturers, cloud providers, and research institutions are fostering innovation in AI model acceleration, power efficiency, and memory-optimized architectures. With growing investment in national AI strategies and data center expansions, Asia Pacific is poised to become a global hub for transformer-optimized chip development and deployment across both enterprise and edge environments.

The China transformer-optimized AI chip market is estimated to grow at a significant CAGR of 22% from 2025 to 2034 within the Asia Pacific market.

- China is rapidly strengthening its position in the transformer-optimized AI chip industry, driven by significant government-backed initiatives, large-scale AI infrastructure investments, and an expanding ecosystem of semiconductor startups. The country’s focus on self-reliance in chip manufacturing and AI innovation, supported by programs like the “Next Generation Artificial Intelligence Development Plan,” is accelerating domestic production of transformer-optimized processors. Major technology firms such as Huawei, Baidu, and Alibaba are developing in-house AI accelerators to enhance training and inference efficiency for large language models and multimodal applications.

- The rise of AI-driven industries, including autonomous driving, smart manufacturing, and intelligent city systems, is fueling strong demand for high-performance, energy-efficient transformer chips. China’s rapidly expanding data center capacity and growing deployment of edge AI devices further strengthen market growth. The integration of advanced packaging, 3D stacking, and high-bandwidth memory technologies is enabling Chinese manufacturers to enhance computational density and cost efficiency. These factors collectively position China as a key growth engine in the global transformer-optimized AI chip ecosystem.

The Latin America transformer-optimized AI chip market, valued at approximately USD 1.9 billion in 2024, is gaining momentum due to the growing integration of AI-driven systems in data centers, cloud platforms, and industrial automation. The region's increasing focus on digital transformation, smart manufacturing, and connected mobility is fueling demand for high-efficiency transformer-optimized processors capable of handling large-scale AI workloads.

Rising investments from global cloud providers, coupled with national initiatives promoting AI education, research, and semiconductor innovation, are further supporting market expansion. Countries such as Brazil, Mexico, and Chile are witnessing accelerated adoption of transformer chips in financial analytics, energy management, and public sector applications. Additionally, partnerships with U.S. and Asian chip developers are improving access to next-generation AI architectures, enhancing computing efficiency, and positioning Latin America as an emerging participant in the global transformer-optimized AI ecosystem.

The Middle East & Africa transformer-optimized AI chip market is projected to reach approximately USD 12 billion by 2034, driven by rising investments in AI-driven infrastructure, data centers, and smart city ecosystems. Regional governments are prioritizing AI integration in public services, autonomous transport, and defense modernization, accelerating demand for high-performance transformer-optimized processors. Expanding digital transformation programs in countries such as Saudi Arabia, the UAE, and South Africa are further fueling market growth by promoting local innovation, AI education, and partnerships with global semiconductor firms.

The UAE is poised for significant growth in the transformer-optimized AI chip market, driven by its ambitious smart city programs, strong government commitment to AI and semiconductor innovation, and substantial investments in digital and cloud infrastructure. The country is prioritizing transformer-optimized chip deployment in AI data centers, autonomous mobility platforms, and intelligent infrastructure, enabling real-time analytics, low-latency inference, and energy-efficient computation for large-scale AI workloads.

- The UAE is emerging as a key regional hub for transformer-optimized AI chips, propelled by initiatives such as the National Artificial Intelligence Strategy 2031 and the UAE Digital Government Strategy. These programs promote AI integration across public services, transport, and industrial automation, accelerating the adoption of high-performance transformer chips in enterprise, defense, and urban infrastructure applications.

- Technology firms and research institutions in the UAE are increasingly collaborating to develop localized AI computing ecosystems that leverage transformer-optimized processors for multimodal AI, NLP, and generative intelligence. The integration of these chips into hyperscale data centers and AI training clusters is enhancing performance scalability and power efficiency. Ongoing partnerships between global semiconductor vendors, local integrators, and academic research centers are fostering innovation in energy-efficient AI architectures and reinforcing the UAE’s leadership in next-generation AI hardware deployment across the Middle East.

Transformer-Optimized AI Chip Market Share

The transformer-optimized AI chip industry is witnessing rapid growth, driven by rising demand for specialized hardware capable of accelerating transformer-based models and large language models (LLMs) across AI training, inference, edge computing, and cloud applications. Leading companies such as NVIDIA Corporation, Google (Alphabet Inc.), Advanced Micro Devices (AMD), Intel Corporation, and Amazon Web Services (AWS) collectively account for over 80% of the global market. These key players are leveraging strategic collaborations with cloud service providers, AI developers, and enterprise solution providers to accelerate adoption of transformer-optimized chips across data centers, AI accelerators, and edge AI platforms. Meanwhile, emerging chip developers are innovating compact, energy-efficient, domain-specific accelerators optimized for self-attention and transformer compute patterns, enhancing computational throughput and reducing latency for real-time AI workloads.

In addition, specialized hardware companies are driving market innovation by introducing high-bandwidth memory integration, processing-in-memory (PIM), and chiplet-based architectures tailored for cloud, edge, and mobile AI applications. These firms focus on improving memory bandwidth, energy efficiency, and latency performance, enabling faster training and inference of large transformer models, multimodal AI, and distributed AI systems. Strategic partnerships with hyperscalers, AI research labs, and industrial AI adopters are accelerating adoption across diverse sectors. These initiatives are enhancing system performance, reducing operational costs, and supporting the broader deployment of transformer-optimized AI chips in next-generation intelligent computing ecosystems.

Transformer-Optimized AI Chip Market Companies

Prominent players operating in the transformer-optimized AI chip industry include:

- Advanced Micro Devices (AMD)

- Alibaba Group

- Amazon Web Services

- Apple Inc.

- Baidu, Inc.

- Cerebras Systems, Inc.

- Google (Alphabet Inc.)

- Groq, Inc.

- Graphcore Ltd.

- Huawei Technologies Co., Ltd.

- Intel Corporation

- Microsoft Corporation

- Mythic AI

- NVIDIA Corporation

- Qualcomm Technologies, Inc.

- Samsung Electronics Co., Ltd.

- SiMa.ai

- SambaNova Systems, Inc.

- Tenstorrent Inc.

- Tesla, Inc.

- NVIDIA Corporation (USA)

NVIDIA Corporation leads the transformer-optimized AI chip market with a share of approximately 43%. The company is recognized for its GPU-based AI accelerators optimized for transformer and large language model workloads. NVIDIA leverages innovations in tensor cores, memory hierarchy, and high-bandwidth interconnects to deliver low-latency, high-throughput performance for AI training and inference. Its ecosystem of software frameworks, including CUDA and NVIDIA AI libraries, strengthens adoption across cloud data centers, enterprise AI, and edge AI deployments, solidifying its leadership position in the market.

- Google (Alphabet Inc.) (USA)

Google holds approximately 14% of the global Transformer-Optimized AI Chip Market. The company focuses on developing domain-specific AI accelerators, such as the Tensor Processing Units (TPUs), tailored for transformer models and large-scale AI workloads. Google’s chips combine high-bandwidth memory, efficient interconnects, and optimized compute patterns to accelerate training and inference in cloud and edge applications. Strategic integration with Google Cloud AI services and AI research initiatives enables scalable deployment of transformer-optimized hardware for enterprise, research, and industrial applications, enhancing the company’s market presence.

- Advanced Micro Devices (AMD) (USA)

AMD captures around 10% of the global Transformer-Optimized AI Chip Market, offering GPU and APU solutions optimized for transformer workloads and large-scale AI training. AMD focuses on high-performance computing capabilities with high-bandwidth memory and multi-die chiplet integration to deliver efficient, low-latency processing. Its collaboration with cloud providers, AI software developers, and enterprise customers enables deployment in data centers, AI research, and edge systems. AMD’s innovation in scalable architectures, memory optimization, and energy-efficient design strengthens its competitive position in the transformer-optimized AI chip space.

Transformer-Optimized AI Chip Industry News

- In April 2025, Google LLC announced its seventh-generation TPU, codenamed “Ironwood”, a transformer-optimized AI accelerator designed specifically for inference workloads and large-scale model serving with extremely high compute and bandwidth. Google emphasized that Ironwood significantly reduces latency for real-time AI applications, including natural language processing and recommendation engines. Ironwood also incorporates improved memory management and model parallelism features, allowing organizations to deploy larger transformer models efficiently on Google Cloud.

- In June 2024, Advanced Micro Devices, Inc. (AMD) revealed its expanded AI-accelerator roadmap at Computex 2024, introducing the Instinct MI325X (and previewing MI350) accelerators with very high memory bandwidth and performance designed for next-generation AI workloads including transformer models. AMD noted that these accelerators are optimized for heterogeneous compute environments, leveraging both GPU and AI-dedicated cores to accelerate training and inference. The company also highlighted their energy-efficient design, enabling data centers and edge deployments to run large transformer workloads with lower power consumption.

- In March 2024, NVIDIA Corporation unveiled its new Blackwell family of AI processors during GTC, each chip packing more than 200 billion transistors and aimed at keeping pace with generative-AI demand and transformer-model acceleration. The Blackwell chips feature enhanced tensor cores and higher memory bandwidth, enabling faster training of large language models. NVIDIA highlighted that these processors also support mixed-precision compute and advanced sparsity techniques, optimizing both performance and energy efficiency for cloud and enterprise AI workloads.

The transformer-optimized AI chip market research report includes in-depth coverage of the industry, with estimates and forecasts of revenue (USD billion) from 2021 to 2034 for the following segments:

Market, By Chip Type

- Neural Processing Units (NPUs)

- Graphics Processing Units (GPUs)

- Tensor Processing Units (TPUs)

- Application-Specific Integrated Circuits (ASICs)

- Field-Programmable Gate Arrays (FPGAs)

Market, By Performance Class

- High-Performance Computing (>100 TOPS)

- Mid-Range Performance (10-100 TOPS)

- Edge/Mobile Performance (1-10 TOPS)

- Ultra-Low Power (<1 TOPS)

Market, By Memory

- High Bandwidth Memory (HBM) Integrated

- On-Chip SRAM Optimized

- Processing-in-Memory (PIM)

- Distributed Memory Systems

Market, By Application

- Large Language Models (LLMs)

- Computer Vision Transformers (ViTs)

- Multimodal AI Systems

- Generative AI Applications

- Others

Market, By End Use

- Technology & Cloud Services

- Automotive & Transportation

- Healthcare & Life Sciences

- Financial Services

- Telecommunications

- Industrial & Manufacturing

- Others

The above information is provided for the following regions and countries:

- North America

  - U.S.

  - Canada

- Europe

  - Germany

  - UK

  - France

  - Spain

  - Italy

  - Netherlands

- Asia Pacific

  - China

  - India

  - Japan

  - Australia

  - South Korea

- Latin America

  - Brazil

  - Mexico

  - Argentina

- Middle East and Africa

  - South Africa

  - Saudi Arabia

  - UAE

Frequently Asked Questions (FAQ):

Who are the key players in the transformer-optimized AI chip market?

Key players include Advanced Micro Devices (AMD), Alibaba Group, Amazon Web Services, Apple Inc., Baidu Inc., Cerebras Systems Inc., Google (Alphabet Inc.), Groq Inc., Graphcore Ltd., Huawei Technologies Co. Ltd., Intel Corporation, Microsoft Corporation, Mythic AI, NVIDIA Corporation, Qualcomm Technologies Inc., Samsung Electronics Co. Ltd., SiMa.ai, SambaNova Systems Inc., Tenstorrent Inc., and Tesla Inc.

What are the upcoming trends in the transformer-optimized AI chip market?

Key trends include a shift to domain-specific AI accelerators, chiplet integration with high-bandwidth memory, processing-in-memory architectures, and the deployment of low-power chips for edge transformer inference and real-time AI workloads.

Which region leads the transformer-optimized AI chip market?

North America held a 40.2% share in 2024, driven by hyperscale cloud providers, an advanced semiconductor R&D ecosystem, and government initiatives like the CHIPS and Science Act.

What is the growth outlook for Neural Processing Units from 2025 to 2034?

Neural processing units (NPUs) are projected to grow at 22.6% CAGR through 2034, supported by demand for energy-efficient hardware optimized for edge and distributed transformer deployments.

What was the valuation of the high-performance computing segment in 2024?

High-performance computing (>100 TOPS) held 37.2% market share and generated USD 16.5 billion in 2024, supporting large-scale AI model training and massive parallelism.

What is the market size of transformer-optimized AI chips in 2024?

The market size was USD 44.3 billion in 2024, with a CAGR of 20.2% expected through 2034 driven by rising demand for hardware that accelerates transformer models and large language models.

How much revenue did the GPUs segment generate in 2024?

Graphics processing units (GPUs) dominated the market with a 32.2% share in 2024, driven by their high parallelism and proven ability to accelerate transformer-based workloads.

What is the projected value of the transformer-optimized AI chip market by 2034?

The transformer-optimized AI chip market is expected to reach USD 278.2 billion by 2034, propelled by expansion of LLMs, generative AI applications, and domain-specific accelerator adoption.

What is the current transformer-optimized AI chip market size in 2025?

The market size is projected to reach USD 53 billion in 2025.
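The headline figures quoted in the answers above can be cross-checked with a few lines of arithmetic. The following is a minimal sketch and not part of the report: the function names are illustrative, and it assumes the 20.2% CAGR is measured over the nine annual periods between the 2025 value (USD 53 billion) and the 2034 value (USD 278.2 billion).

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` annual steps."""
    return (end_value / start_value) ** (1 / periods) - 1


def segment_revenue(total_market: float, share_pct: float) -> float:
    """Revenue implied by a percentage share of the total market."""
    return total_market * share_pct / 100


# Figures quoted in the report (USD billion).
MARKET_2024 = 44.3
MARKET_2025 = 53.0
MARKET_2034 = 278.2

# Assumption: the forecast CAGR compounds over 2025 -> 2034 (9 periods).
forecast_cagr = cagr(MARKET_2025, MARKET_2034, periods=2034 - 2025)

# High-performance computing (>100 TOPS) held a 37.2% share in 2024.
hpc_revenue_2024 = segment_revenue(MARKET_2024, 37.2)

print(f"Implied CAGR 2025-2034: {forecast_cagr:.1%}")
print(f"Implied HPC revenue 2024: USD {hpc_revenue_2024:.1f} billion")
```

Under that assumption, both results round to the values the report states: a 20.2% CAGR and roughly USD 16.5 billion for the high-performance computing segment.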

